You’ve found the perfect YouTube video (a conference talk, tutorial, or documentary), but it’s in a language you barely understand. Or perhaps you’re a content creator staring at hours of footage, wondering how to turn it into bite-sized social media posts without spending days on manual transcription. Both problems are common in a globalized digital world, where valuable content sits behind language barriers and time constraints. AI has become a practical answer, offering fast automatic transcription and translation that was out of reach for most people only a few years ago. These tools do more than cross language barriers: they can generate summaries, extract key moments, and repurpose long-form content in minutes. This article shows how to use AI-powered transcription and translation tools to unlock YouTube’s global content library, reach international audiences, and change how you consume and create video content.

Why YouTube Transcription and Translation Matters Today

The demand for multilingual video content has grown rapidly, and viewers outside the United States now account for the large majority of YouTube’s watch time (figures of over 80% are widely cited). Creators who ignore this global audience leave substantial growth opportunities untapped. A technology tutorial published only in English might reach millions, but the same video with Spanish, Hindi, and Portuguese translations could reach a substantially larger audience.

Content repurposing has become essential for staying visible across platforms. A single 30-minute YouTube video can feed dozens of social media posts, blog articles, and newsletter items, but only if you can extract its core messages efficiently. Transcribing that video by hand can take several hours, which makes manual transcription impractical for most creators.

Search engines cannot watch videos, but they can read text. Transcripts supply searchable keywords that improve discoverability: accurate captions give both YouTube’s search and Google more material to match against queries, which can drive organic traffic without additional advertising spend.

Accessibility laws in many countries require captions for commercial and educational videos. Beyond legal compliance, subtitles serve the estimated 466 million people worldwide with disabling hearing loss, plus the growing number of viewers who watch on mute in public spaces or at work. AI transcription turns accessibility from a burdensome obligation into an automated workflow step that also improves SEO and audience reach.

Behind the Tech: How AI Video Transcription Works

Modern speech-to-text systems use neural networks trained on hundreds of thousands to millions of hours of spoken language, and on clean, studio-quality audio they can exceed 95% word accuracy. Rather than matching sounds one at a time, they model acoustic patterns and surrounding context together, so later words can help resolve an earlier ambiguous one. When you submit a YouTube video, a typical pipeline extracts the audio track and may first suppress background music and ambient noise. Classic recognizers then map short audio frames to phonetic units called phonemes and search for the most probable word sequence, while newer end-to-end models, such as OpenAI’s Whisper, convert a spectrogram of the audio directly into text tokens.
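Accuracy figures such as “95%” are commonly derived from the word error rate (WER): the number of substituted, deleted, and inserted words needed to turn the AI’s transcript into a human reference transcript, divided by the number of reference words. The sketch below computes WER with a standard edit-distance table. The example sentences are made up, and real evaluations usually normalize punctuation and casing more carefully than this simple lowercase-and-split approach.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dp[i][j]: fewest edits turning the first i reference words into the first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,                 # deletion
                dp[i][j - 1] + 1,                 # insertion
                dp[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# Hypothetical example: one wrong word out of ten
ref = "the speaker explains how neural networks turn audio into text"
hyp = "the speaker explains how neural network turn audio into text"
wer = word_error_rate(ref, hyp)
print(f"WER = {wer:.0%}, word accuracy = {1 - wer:.0%}")  # prints: WER = 10%, word accuracy = 90%
```

Because insertions count as errors, WER can exceed 100% for a transcript padded with extra words, which is why “accuracy” is only a rough shorthand for 1 minus WER.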

Models improve as vendors retrain them on larger and more diverse recordings covering different accents, speaking styles, regional pronunciations, technical jargon, and conversational speech. A single transcription session usually does not change the model you are using; improvements typically arrive as new model versions. As a result, today’s systems handle a thick Scottish accent or a rapid-fire auctioneer far better than those of just a few years ago.

Advanced systems also insert punctuation automatically, using cues such as pauses, speech rhythm, and intonation, together with the surrounding words, to predict sentence boundaries and question marks. A rising pitch at the end of a sentence, for example, helps distinguish “You’re leaving.” from “You’re leaving?” without human intervention. Paragraph breaks can be placed at extended pauses or topic shifts, producing readable text instead of a wall of run-on sentences.
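Pause-based paragraph segmentation can be sketched in a few lines. This assumes the recognizer returns word-level timestamps as (word, start, end) tuples, which many ASR tools can produce in some form; the 1.5-second threshold is an arbitrary illustrative choice, and production systems typically combine pauses with punctuation and topic models.

```python
from typing import List, Tuple

# Assumed input format: (word, start_seconds, end_seconds) for each recognized word
Word = Tuple[str, float, float]


def split_on_pauses(words: List[Word], min_gap: float = 1.5) -> List[str]:
    """Start a new paragraph wherever the silence between two words exceeds min_gap seconds."""
    paragraphs: List[List[str]] = []
    current: List[str] = []
    prev_end = None
    for text, start, end in words:
        if prev_end is not None and start - prev_end > min_gap:
            paragraphs.append(current)  # long pause: close the current paragraph
            current = []
        current.append(text)
        prev_end = end
    if current:
        paragraphs.append(current)
    return [" ".join(p) for p in paragraphs]


words = [
    ("Welcome", 0.0, 0.4), ("to", 0.45, 0.55), ("the", 0.6, 0.7), ("talk.", 0.75, 1.1),
    ("First,", 3.0, 3.4), ("some", 3.5, 3.7), ("background.", 3.8, 4.5),
]
for paragraph in split_on_pauses(words):
    print(paragraph)
```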

Real-time transcription processes audio as it streams and delivers text with a delay of only 2-3 seconds, which suits live events and accessibility captions. Post-processing modes trade speed for accuracy: they analyze the entire video, often in multiple passes, and use the full context to resolve ambiguous words, producing noticeably more accurate transcripts of pre-recorded content.
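One reason real-time captions lag by a couple of seconds is that a streaming recognizer works on short windows of audio, and text for a window cannot appear until that window has been captured. The sketch below splits raw samples into overlapping windows; the 2-second window and 0.5-second overlap are illustrative assumptions, not settings from any particular product.

```python
from typing import Iterator, List


def stream_windows(samples: List[float], sample_rate: int,
                   window_s: float = 2.0, overlap_s: float = 0.5) -> Iterator[List[float]]:
    """Yield overlapping windows of audio samples, the way a streaming recognizer consumes them."""
    window = int(window_s * sample_rate)
    step = window - int(overlap_s * sample_rate)
    if window <= 0 or step <= 0:
        raise ValueError("window must be positive and longer than the overlap")
    for start in range(0, len(samples), step):
        yield samples[start:start + window]
        if start + window >= len(samples):
            break  # this window already reached the end of the audio


sample_rate = 16_000                 # 16 kHz, a common rate for speech models
audio = [0.0] * (5 * sample_rate)    # five seconds of silence as placeholder audio
for n, chunk in enumerate(stream_windows(audio, sample_rate)):
    # Text for this window can only be produced once all of its samples have arrived
    print(f"window {n}: {len(chunk) / sample_rate:.1f} s of audio")
```

The overlap gives the model context across window boundaries, so a word split between two windows is less likely to be lost; a longer window improves context but raises the minimum delay.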

AI Translation Engines: Beyond Basic Subtitles

Context-Aware Translation Systems

Modern AI translation goes far beyond word-for-word substitution. Transformer models process an entire sentence before rendering it in the target language. When a video mentions “breaking the ice,” a context-aware system recognizes the social metaphor rather than literal frozen water and renders it with an equivalent idiom, such as “romper el hielo” in Spanish or “打破僵局” in Chinese. The same contextual awareness applies to professional terminology: “cloud computing” in a tech tutorial keeps its technical meaning instead of being mistranslated as a weather phenomenon.

Industry-specific translation models trained on domain corpora tend to handle medical, legal, and technical jargon more accurately than general-purpose engines. In a pharmaceutical video, “drug trials” should become clinical-testing terminology rather than courtroom vocabulary. Likewise, “Python” in a programming context refers to the coding language, not the reptile, and a domain-aware engine keeps that meaning consistent across every target language.
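When a specialized engine is not available, a common workaround is to protect glossary terms before translation and restore the approved target-language terms afterward. The sketch below masks terms with numbered placeholders. The translate parameter stands in for whatever machine translation call you use, and fake_translate is a toy stand-in invented for this example; some commercial translation APIs offer built-in glossary features that make this unnecessary.

```python
import re
from typing import Callable, Dict, Tuple


def protect_terms(text: str, glossary: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
    """Replace glossary terms with numbered placeholders the translator should leave alone."""
    placeholders: Dict[str, str] = {}
    # Longest terms first, so "cloud computing" is matched before a shorter term like "cloud"
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b")
        if pattern.search(text):
            text = pattern.sub(token, text)
            placeholders[token] = glossary[term]
    return text, placeholders


def translate_with_glossary(text: str, glossary: Dict[str, str],
                            translate: Callable[[str], str]) -> str:
    """Mask glossary terms, translate, then swap in the approved target-language terms."""
    masked, placeholders = protect_terms(text, glossary)
    translated = translate(masked)
    for token, target_term in placeholders.items():
        if token not in translated:
            raise ValueError(f"translator altered placeholder {token}")
        translated = translated.replace(token, target_term)
    return translated


def fake_translate(text: str) -> str:
    """Toy English-to-Spanish stand-in for a real machine translation call."""
    return text.replace("Learn", "Aprende").replace("with", "con")


glossary = {"cloud computing": "computación en la nube", "Python": "Python"}
result = translate_with_glossary("Learn cloud computing with Python", glossary, fake_translate)
print(result)  # Aprende computación en la nube con Python
```

Real engines occasionally alter or drop placeholders, which is why the sketch checks that every token survives before restoring it.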

Real-Time Implementation Challenges

Live translation forces the AI to balance speed against accuracy: processing speech, translating it, and displaying subtitles typically takes 3-5 seconds, and the system must keep that delay small enough not to disrupt the viewing experience. To reduce lag, some systems start translating before the speaker finishes a sentence, in effect predicting where it is heading, which occasionally produces visible mid-subtitle corrections when a prediction proves wrong.
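One well-studied way to start translating before a sentence ends is the wait-k policy from simultaneous machine translation research: the system waits for the first k source words, then alternates between writing one translated word and reading one more source word. The sketch below only computes that read/write schedule, not the translation itself. Whether any given subtitle tool uses wait-k is an assumption; many instead retranslate the growing sentence, which is what produces visible corrections.

```python
from typing import List, Tuple


def wait_k_schedule(source_len: int, target_len: int, k: int) -> List[Tuple[int, int]]:
    """(target word number, source words read before writing it) under a wait-k policy.

    The system first reads k source words, then alternates: write one target word,
    read one more source word, until the whole source sentence has been read.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    return [(t, min(k + t - 1, source_len)) for t in range(1, target_len + 1)]


for t, read in wait_k_schedule(source_len=6, target_len=6, k=3):
    print(f"target word {t} written after reading {read} of 6 source words")
```

A smaller k shortens the delay before the first subtitle word appears but gives the model less context, which is exactly the speed-versus-accuracy balance described above.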

Character-based scripts such as Chinese and Japanese pose their own layout challenges. They often express the same content in fewer characters than alphabetic languages, but each character is rendered full-width, so subtitle engines must adjust line breaks, positioning, and display duration dynamically. Right-to-left scripts such as Arabic need bidirectional text rendering, particularly when a line mixes in Latin-script names or numbers, along with careful font selection and background contrast to stay legible across devices and screen sizes.
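The layout difference can be made concrete with Python’s standard unicodedata module. The sketch below estimates on-screen width by counting East Asian wide and full-width characters as two columns, and detects right-to-left text from each character’s bidirectional class. It is a simplification: it ignores combining marks, and actual rendering depends on the font and the player.

```python
import unicodedata


def display_columns(text: str) -> int:
    """Approximate on-screen width: East Asian wide ('W') and fullwidth ('F') chars count as 2."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in text)


def needs_rtl_layout(text: str) -> bool:
    """True if any character has a right-to-left bidi class ('R' as in Hebrew, 'AL' as in Arabic)."""
    return any(unicodedata.bidirectional(ch) in ("R", "AL") for ch in text)


for line in ["Break the ice", "打破僵局", "كسر الجليد"]:
    print(f"{line!r}: {len(line)} chars, {display_columns(line)} columns, "
          f"rtl={needs_rtl_layout(line)}")
```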

Step-by-Step: Instant YouTube Video Translation Process

Choosing Your AI Video Translator

Web-based platforms offer immediate access without software installation, ideal for occasional users who need quick translations across multiple devices. These browser tools typically charge per minute of video processed or operate on subscription models with monthly quotas. Browser extensions integrate directly into YouTube’s interface, adding translation buttons beneath videos for seamless workflow, though they often provide fewer customization options than standalone applications.

Desktop software can offer faster processing and offline capabilities, which matter for creators handling sensitive content or working with unreliable internet connections. When evaluating tools, prioritize support for the language pairs your target audiences need; some services excel at European languages but struggle with Asian or African languages. Processing speed varies widely: a budget tool may take 30 minutes to translate a 10-minute video, while a premium service may finish the same task in under two minutes. Export options also determine usability; look for SRT subtitle files, embedded captions, and editable transcripts compatible with major video editing platforms.

Transcription and Translation Workflow

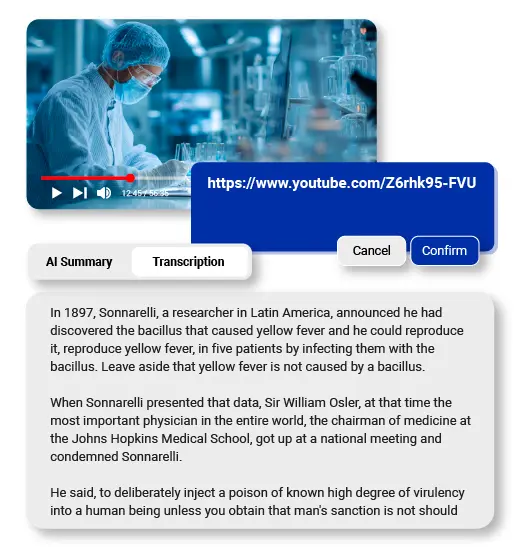

Begin by copying the complete YouTube URL from your browser’s address bar, including any timestamp parameter (such as t=90) if you want to start from a specific point and your tool supports it. Paste the URL into your chosen tool’s input field; most platforms fetch the video’s metadata and duration within seconds. Then select the source language from the dropdown menu. Many tools detect it automatically, but selecting it manually improves accuracy for videos that mix languages or feature heavy accents.
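If you want to check what a link encodes before pasting it, the video ID and optional start time can be parsed with the standard library. This sketch handles the common youtube.com/watch?v=... and youtu.be/... forms and t values like 90, 90s, or 1m30s. It does not cover every URL variant (Shorts, embeds, playlists), and the video ID in the example is a made-up placeholder.

```python
import re
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlparse


def parse_timestamp(t: str) -> int:
    """Convert a t parameter such as '90', '90s' or '1m30s' into seconds (0 if unrecognized)."""
    if t.isdigit():
        return int(t)
    match = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?(?:(\d+)s)?", t)
    if not match:
        return 0
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def parse_youtube_url(url: str) -> Tuple[Optional[str], int]:
    """Return (video_id, start_seconds) for youtube.com/watch?v=... or youtu.be/... links."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query)
    host = parsed.netloc.lower()
    if host == "youtu.be":
        video_id = parsed.path.lstrip("/") or None
    elif host.endswith("youtube.com") and parsed.path == "/watch":
        video_id = query.get("v", [None])[0]
    else:
        video_id = None  # Shorts, embeds, playlists, etc. are not handled in this sketch
    start = parse_timestamp(query.get("t", ["0"])[0])
    return video_id, start


# "abcDEF12345" is a made-up video ID
print(parse_youtube_url("https://www.youtube.com/watch?v=abcDEF12345&t=1m30s"))
print(parse_youtube_url("https://youtu.be/abcDEF12345?t=42"))
```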

Next, choose your target language; advanced platforms let you translate into several languages at once, which helps creators serving diverse international audiences. Processing time depends on video length and server load, typically 1-3 minutes per 10 minutes of video. Most services show a progress bar and an estimated completion time, so you can queue more videos or work on other tasks in the meantime.

The editing interface presents synchronized transcript and translation panels where you can correct transcription errors before they propagate into translated versions. Click any timestamp to jump directly to that video moment, verifying accuracy of technical terms, proper nouns, or ambiguous phrases that AI might misinterpret. Many platforms highlight low-confidence words in yellow or red, flagging sections requiring human review for quality assurance.
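Confidence highlighting boils down to thresholding per-word scores. The sketch below assumes the transcription tool exposes a confidence value between 0 and 1 for each word (many do, under varying names); the 0.5 and 0.8 cut-offs for red and yellow are arbitrary illustrations, not any platform’s actual settings.

```python
from typing import List, Tuple


def flag_for_review(words: List[Tuple[str, float]], red_below: float = 0.5,
                    yellow_below: float = 0.8) -> List[Tuple[str, str]]:
    """Label each word 'red', 'yellow', or 'ok' based on the recognizer's confidence score."""
    labels = []
    for word, confidence in words:
        if confidence < red_below:
            level = "red"      # very likely wrong: check against the audio
        elif confidence < yellow_below:
            level = "yellow"   # uncertain: worth a quick listen
        else:
            level = "ok"
        labels.append((word, level))
    return labels


# Hypothetical recognizer output: (word, confidence)
transcript = [("Install", 0.97), ("the", 0.99), ("Kubernetes", 0.62), ("cubectl", 0.41), ("tool", 0.93)]
for word, level in flag_for_review(transcript):
    if level != "ok":
        print(f"review {word!r}: {level}")
```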

Exporting and Implementation

Generate SRT files by selecting the export option and choosing SubRip format, which creates time-stamped text files compatible with virtually all video platforms and editing software. These files contain start times, end times, and subtitle text in plain text format that you can further edit in any text editor if needed. For direct YouTube implementation, download the translated subtitle file and navigate to YouTube Studio, select your video, click Subtitles in the left menu, then upload your SRT file under the appropriate language designation.
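The SubRip format is simple enough to generate yourself, which is handy when a tool exports timestamps but not subtitles. Each cue is a sequence number, a start and end time in HH:MM:SS,mmm form separated by -->, one or more lines of text, and a blank line. The segment list below is hypothetical; save the result with UTF-8 encoding so accented characters survive the upload.

```python
from typing import List, Tuple


def srt_timestamp(seconds: float) -> str:
    """Format seconds as SRT's HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: List[Tuple[float, float, str]]) -> str:
    """Build an SRT document: numbered cues, a time-range line, the text, then a blank line."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)


# Hypothetical translated segments: (start_seconds, end_seconds, text)
segments = [(0.0, 2.5, "Hola a todos."), (2.5, 6.04, "Hoy hablamos de subtítulos.")]
print(to_srt(segments))
```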

Each platform has its own conventions for line length and display duration. A common captioning guideline, inherited from broadcast standards, is a maximum of 32 characters per line and two lines per caption, while short-form vertical platforms such as TikTok tend to favor shorter bursts of around 15-20 characters for mobile viewing. Facebook often auto-plays videos muted in the feed, which makes accurate subtitle timing critical for holding viewers during the crucial first three seconds. When burning subtitles permanently into video files with editing software, check that the font stays readable on a phone by previewing at roughly 5-inch screen size before final export.
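Line-length limits are easy to enforce before export. This sketch wraps text at word boundaries with the standard textwrap module and groups the lines into two-line caption blocks. The 32-character default reflects the 32-character figure mentioned above and is an assumption you should adjust per platform, for example a smaller value for short-form vertical video.

```python
import textwrap
from typing import List


def to_caption_blocks(text: str, max_chars: int = 32, max_lines: int = 2) -> List[str]:
    """Wrap text at word boundaries to max_chars per line, then group lines into caption blocks."""
    lines = textwrap.wrap(text, width=max_chars, break_long_words=False)
    return ["\n".join(lines[i:i + max_lines]) for i in range(0, len(lines), max_lines)]


sentence = ("Subtitles should be short enough to read at a glance, "
            "even on a small phone screen.")
for block in to_caption_blocks(sentence):
    print(block)
    print("---")
```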

Creating Shareable Content with AI Summarizers

Some AI summarizers identify peak engagement moments by analyzing speech patterns, emotional intensity in the voice, and (where the tool has access to it) audience retention data, flagging segments where creators emphasize key points or viewers rewatch. The extracted clips can be trimmed to common social media lengths, for example around 15 seconds for TikTok, 60 seconds for Instagram Reels, or 2 minutes for LinkedIn, and come with suggested captions that convey the core message without requiring context from the full video.
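Once each second of a video has some kind of highlight score, choosing a clip of fixed length is a sliding-window problem. In the sketch below the per-second scores are hypothetical inputs (a real tool might derive them from retention graphs, loudness, or speech emphasis); the function returns the window with the highest total score in linear time.

```python
from typing import List, Tuple


def best_clip(scores: List[float], clip_len: int) -> Tuple[int, int]:
    """Return (start_second, end_second) of the contiguous window with the highest total score.

    scores[i] is a hypothetical per-second highlight score; clip_len is the target clip
    length in seconds (for example 15 for a short vertical clip).
    """
    if not 0 < clip_len <= len(scores):
        raise ValueError("clip_len must be between 1 and len(scores)")
    window = sum(scores[:clip_len])
    best_total, best_start = window, 0
    for start in range(1, len(scores) - clip_len + 1):
        # Slide one second: add the new last second, drop the old first second
        window += scores[start + clip_len - 1] - scores[start - 1]
        if window > best_total:
            best_total, best_start = window, start
    return best_start, best_start + clip_len


# Hypothetical scores: a 15-second burst of high engagement starting at 0:30
scores = [0.1] * 30 + [0.9] * 15 + [0.2] * 30
start, end = best_clip(scores, clip_len=15)
print(f"suggested clip: {start}s to {end}s")
```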

Generating tweetable highlights transforms dense educational content into shareable soundbites that drive traffic back to full videos. The AI identifies quotable statements, surprising statistics, and actionable advice, formatting them with optimal character counts and relevant hashtags based on trending topics in your niche. These micro-content pieces serve as discovery tools, appearing in social feeds where they attract viewers who then seek out complete videos for deeper understanding.

Chapter markers generated from AI summaries make long-form content easier to navigate: the system detects topic transitions and creates descriptive timestamps like “3:45 – Common Translation Mistakes” or “12:20 – Cost Comparison.” Chapters let viewers jump directly to relevant sections, and Google can show them as key moments in search results. Blog post transformation turns video summaries into SEO-optimized articles by expanding bullet points into paragraphs, adding transitions, and structuring content with headers that match search intent, so a single recording yields both a video and a written article.
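For chapter markers, YouTube’s help documentation states that the first timestamp in the description must start at 0:00, that a video needs at least three timestamps in ascending order, and that each chapter must be at least 10 seconds long. The sketch below formats AI-suggested chapters and checks those rules before you paste them into a description; the chapter titles and video length are illustrative.

```python
from typing import List, Tuple


def format_ts(seconds: int) -> str:
    """Format seconds as M:SS, or H:MM:SS for videos longer than an hour."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h}:{m:02d}:{s:02d}" if h else f"{m}:{s:02d}"


def chapter_list(chapters: List[Tuple[int, str]], video_length: int) -> str:
    """Render (start_seconds, title) pairs as description lines after basic rule checks."""
    if not chapters or chapters[0][0] != 0:
        raise ValueError("the first chapter must start at 0:00")
    if len(chapters) < 3:
        raise ValueError("at least three chapters are required")
    starts = [start for start, _ in chapters]
    ends = starts[1:] + [video_length]
    for (start, title), end in zip(chapters, ends):
        # Also catches out-of-order timestamps, since end - start would be negative
        if end - start < 10:
            raise ValueError(f"chapter {title!r} is shorter than 10 seconds")
    return "\n".join(f"{format_ts(start)} – {title}" for start, title in chapters)


suggested = [(0, "Introduction"), (225, "Common Translation Mistakes"), (740, "Cost Comparison")]
print(chapter_list(suggested, video_length=900))
```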

Top Tools Comparison: AI Video Translation Solutions

Comparing features side by side reveals distinct strengths: some platforms excel at Asian language pairs, while others lead in European translation. Reported accuracy typically ranges from 85% to 98% depending on audio quality and language complexity, and Romance languages such as Spanish or French often score higher than tonal languages like Mandarin or Vietnamese. Pricing models vary widely. As a rough guide, entry-level services offer 30-60 free minutes per month with overage charges of around $0.15-0.30 per minute, while professional subscriptions provide unlimited processing for $20-50 per month, along with priority server access and advanced editing features.
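The pricing trade-off is simple arithmetic: once you exceed the free allowance, a pay-as-you-go plan costs the extra minutes times the per-minute rate, so an unlimited subscription becomes cheaper above the free minutes plus the subscription price divided by the rate. The sketch uses illustrative figures from the ranges above (60 free minutes, $0.20 per extra minute, a $30 subscription), not quotes from any specific vendor; with those numbers the break-even point is 210 minutes of video per month.

```python
def pay_as_you_go_cost(minutes: float, free_minutes: float, per_minute: float) -> float:
    """Monthly cost of a plan with a free allowance and a per-minute overage charge."""
    return max(0.0, minutes - free_minutes) * per_minute


def break_even_minutes(subscription: float, free_minutes: float, per_minute: float) -> float:
    """Monthly minutes above which a flat unlimited subscription is cheaper than pay-as-you-go."""
    return free_minutes + subscription / per_minute


# Illustrative numbers taken from the ranges above, not from any specific vendor
print(f"break-even: {break_even_minutes(30.0, 60, 0.20):.0f} minutes per month")
for minutes in (100, 300):
    print(f"{minutes} min: pay-as-you-go ${pay_as_you_go_cost(minutes, 60, 0.20):.2f} vs subscription $30.00")
```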

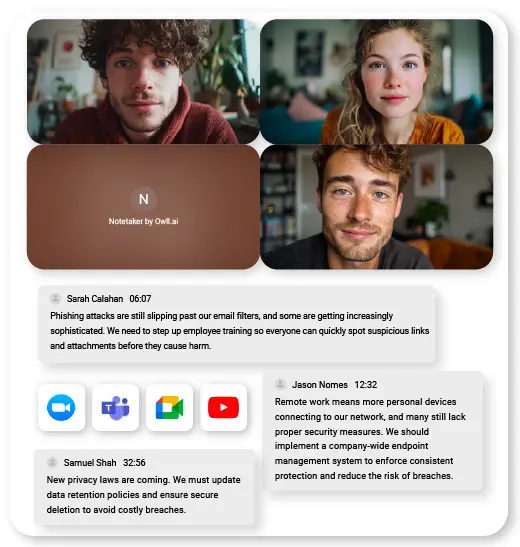

Mobile applications trade processing speed for convenience, often taking three to four times longer than desktop equivalents, but they make on-the-go translation possible for quick social media repurposing. Some desktop solutions integrate with Adobe Premiere Pro, Final Cut Pro, and DaVinci Resolve through plugins that import timestamped transcripts directly into the editing timeline, eliminating manual synchronization. Specialized solutions like Owll AI target particular audiences: education creators with automatic terminology glossaries, podcast producers with speaker identification, and business users with collaborative workspaces where multiple editors can review translations before publication.

Unlock Global Audiences with AI Translation

AI-powered transcription and translation tools have fundamentally transformed how creators and viewers interact with YouTube’s vast content ecosystem. What once required teams of translators and hours of manual labor now happens in minutes, democratizing global content distribution for independent creators and small businesses. These technologies don’t just overcome language barriers—they multiply your content’s lifespan through automated repurposing, improve discoverability through searchable transcripts, and expand accessibility for millions of viewers who rely on captions.

The most effective approach combines automated efficiency with strategic human oversight. Let AI handle the heavy lifting of initial transcription and translation, then spend your time reviewing technical terms, cultural references, and brand-specific language that machines still occasionally misinterpret. Start with your best-performing videos to maximize immediate impact, and translate your YouTube video content into the two or three languages where your analytics show untapped audience potential.

As neural networks continue advancing, expect even more sophisticated features like emotion-aware translations, automatic cultural adaptation, and voice cloning that delivers translated audio in your original speaking style. The creators who experiment with these tools today position themselves ahead of tomorrow’s content landscape. Choose one platform this week, translate a single video, and measure the results—your global audience is waiting.